Note: The directions at "http://wiki.openvz.org/Install_OpenVZ_on_a_x86_64_system_Centos-Fedora#STEP_12" did not quite work for me, because ".gpgkeyschecked.yum" is also created in the yum-cache directory and is not available to the containers. The workaround below worked for me.

To share the vzyum cache directory among containers, edit "/etc/auto.master" to include the following:

/vz/root/{vpsid}/var/cache/yum-cache /etc/auto.vzyum

Include one line for each installed or planned VPS, replacing {vpsid} with that container's ID.
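With many containers, typing these lines by hand gets tedious. The sketch below prints one entry per container ID passed to it; the function name `automaster_lines` is made up for this example. It only writes to standard output, so you can review the result before adding it to "/etc/auto.master".

```shell
#!/bin/sh
# automaster_lines: hypothetical helper that prints one /etc/auto.master
# entry per container ID given as an argument.
automaster_lines() {
  for id in "$@"; do
    # Each container gets its own autofs mount point under its root.
    printf '/vz/root/%s/var/cache/yum-cache /etc/auto.vzyum\n' "$id"
  done
}

# Example: print the entries for containers 101 and 102.
automaster_lines 101 102
```

On a host with the OpenVZ tools installed, `vzlist -a -H -o veid` should list the IDs of all existing containers, so `automaster_lines $(vzlist -a -H -o veid)` prints entries for each of them; IDs of planned containers still have to be added by hand. Check the output before appending it with `>> /etc/auto.master`, since running the command twice duplicates the entries.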

Then create the file "/etc/auto.vzyum" containing only this line:

share -bind,ro,nosuid,nodev :/var/cache/yum-cache/share
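In this map entry, "share" is the key (the subdirectory autofs creates on demand under each mount point listed in "/etc/auto.master"), and the leading colon in ":/var/cache/yum-cache/share" marks a local path on the host rather than a remote one. If you prefer to script the step, a minimal sketch follows; the `write_vzyum_map` name is hypothetical, and it simply overwrites the target file with the single line above.

```shell
#!/bin/sh
# write_vzyum_map: hypothetical helper that writes the autofs map shown above
# to the file given as $1 (normally /etc/auto.vzyum).
write_vzyum_map() {
  map_file=$1
  # Overwrite the file so that it contains exactly this one line.
  printf '%s\n' 'share -bind,ro,nosuid,nodev :/var/cache/yum-cache/share' > "$map_file"
}
```

Run as root, `write_vzyum_map /etc/auto.vzyum` replaces any existing content of the map file.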

Restart the automounter daemon.
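On CentOS 5 the automounter runs as the autofs service, so the restart is usually `service autofs restart`. Because autofs mounts on demand, the bind mount only appears after something accesses the path. The sketch below reports whether the share is currently mounted for a given container by looking it up in a mounts table (/proc/mounts by default); the `share_mounted` helper and its optional second argument are hypothetical.

```shell
#!/bin/sh
# share_mounted: hypothetical helper. Succeeds if the yum cache share for
# container $1 appears as a mount point in the mounts table ($2, default
# /proc/mounts).
share_mounted() {
  id=$1
  mounts=${2:-/proc/mounts}
  target="/vz/root/$id/var/cache/yum-cache/share"
  # The second field of each mounts line is the mount point.
  awk -v t="$target" '$2 == t { found = 1 } END { exit !found }' "$mounts"
}
```

For example, `ls /vz/root/101/var/cache/yum-cache/share >/dev/null && share_mounted 101 && echo mounted` first triggers the automount for a running container 101 and then confirms it.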

Edit "/vz/template/centos/5/x86_64/config/yum.conf" and change the cachedir location:

cachedir=/var/cache/yum-cache/share
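The same edit can be made non-interactively. This is a sketch, not part of the original procedure: the `set_vzyum_cachedir` name is hypothetical, it keeps a backup as "yum.conf.bak", and it refuses to run if the file has no `cachedir=` line rather than guessing where to add one.

```shell
#!/bin/sh
# set_vzyum_cachedir: hypothetical helper that points every cachedir= line in
# the yum.conf given as $1 at the shared directory, keeping "$1.bak".
set_vzyum_cachedir() {
  conf=$1
  # Only rewrite existing lines; do not invent a new cachedir entry.
  if ! grep -q '^cachedir=' "$conf"; then
    echo "no cachedir= line in $conf" >&2
    return 1
  fi
  cp -p "$conf" "$conf.bak" || return 1
  # Write back through a redirect so the original file's permissions stay.
  sed 's|^cachedir=.*|cachedir=/var/cache/yum-cache/share|' "$conf.bak" > "$conf"
}
```

For the template used here, that would be `set_vzyum_cachedir /vz/template/centos/5/x86_64/config/yum.conf`; other templates have their own yum.conf and would need the same change.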

Create the corresponding cache directory on the host:

mkdir /var/cache/yum-cache/share

Test with:

vzyum {vpsid} clean all

This should create the yum cache files under "/var/cache/yum-cache/share", which the OpenVZ container can then see through the bind mount.
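To confirm that the workaround addresses the problem from the note at the top, you can check that ".gpgkeyschecked.yum" is reachable through a container's view of the share. The `check_gpg_marker` helper and its optional root-directory argument are hypothetical; the check assumes the container is running (so that /vz/root/{vpsid} is populated) and that vzyum has already run an operation that creates the marker file. Looking the path up from the host should also trigger the automount.

```shell
#!/bin/sh
# check_gpg_marker: hypothetical helper. Looks for .gpgkeyschecked.yum in the
# shared cache as seen from container $1's root. $2 overrides the container
# root directory (default /vz/root), which is handy for testing.
check_gpg_marker() {
  id=$1
  vz_root=${2:-/vz/root}
  marker="$vz_root/$id/var/cache/yum-cache/share/.gpgkeyschecked.yum"
  if [ -e "$marker" ]; then
    echo "found: $marker"
  else
    echo "missing: $marker" >&2
    return 1
  fi
}
```

From inside the container, `vzctl exec {vpsid} ls -a /var/cache/yum-cache/share` gives a similar view.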

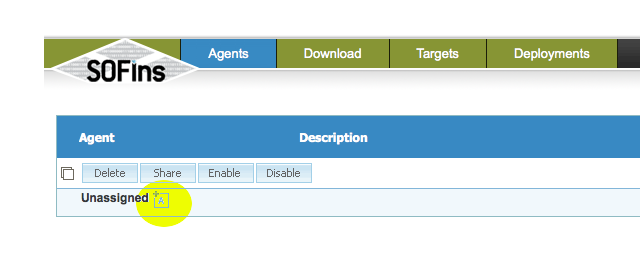

Select the checkbox beside the newly created agent and click the "Share" button.

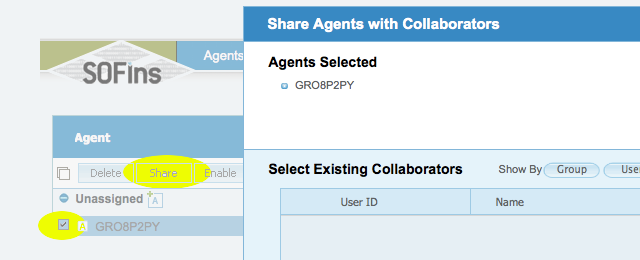

Enter the email address of the person to share the agent with, select the "Offer assistance" tab, and click "OK".

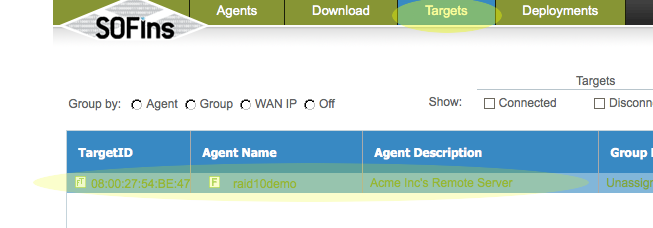

On the "Targets" page, click the "Authorize Access" tab to "Enable" access and obtain the SSH credentials required to log in to the remote server.

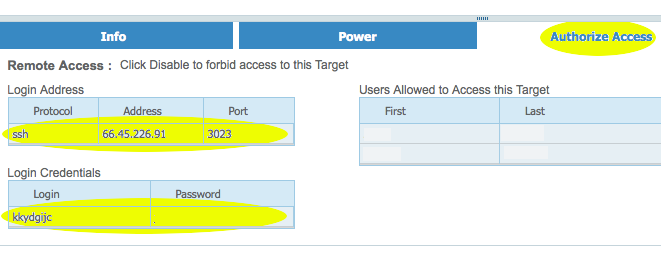

Log in via SSH to the IP address and port shown on the "Access" page.
